DHS maintains an inventory of unclassified and non-sensitive AI use cases. DHS and other federal agencies are required to create an AI use case inventory and make it publicly available to the extent practicable and in accordance with applicable law and policy.

New for the 2024 Inventory

- A broader inventory of DHS AI use cases

- Additional details about inventoried AI use cases

- Information about use cases with potential impacts on safety and rights

- Access to historical inventories from 2022 and 2023

Read more about the changes to the 2024 DHS Use Case Inventory.

Full DHS AI Use Case Inventory

The Full DHS AI Use Case Inventory lists AI use cases within DHS and provides detailed information about each one. This Inventory is available as a downloadable spreadsheet on the AI Use Case Inventory Library page.

Simplified AI Use Case Inventory

The Simplified DHS AI Use Case Inventory provides an overview for each AI use case, organized by DHS component, and includes the following information:

- Use Case Name, ID Number, and Summary: a brief description of the use case along with its ID number (which remains the same even if the name or summary changes).

- Topic Areas: a standard categorization of the use case’s topic, such as government services (includes benefits and service delivery), law & justice, and mission-enabling (internal agency support).

- Deployment Status: whether a use case is in pre-deployment, deployment, or inactive.

- Safety and/or Rights Impact: whether a use case is considered potentially impacting safety and/or rights of the public, and if so, additional information about identified risks and implemented mitigations and compliance with risk management practices in Office of Management and Budget Memo M-24-10.

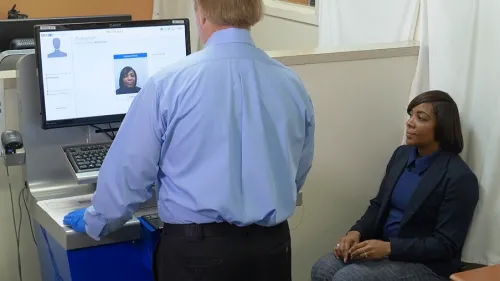

- Face Recognition/Face Capture (FR/FC): whether a use case involves FR/FC technology, and if so, more information about FR/FC at DHS.

DHS AI Use Case Inventory FAQ

DHS defines an AI use case as a specific application of AI that addresses a specific, identified problem or requirement for DHS and results in a mission-enhancing benefit.

The DHS AI Use Case Inventory includes all unclassified and non-sensitive AI use cases that can be publicly disclosed to the maximum extent practicable and in accordance with applicable law and policy. The inventory does not include (1) use cases within an element of the intelligence community, (2) use cases used within a national security system described in 40 U.S.C. § 11103(a), (3) research and development (R&D) use cases, unless the R&D use case is deployed in a real-world context, and (4) use cases implemented solely with a commercial-off-the-shelf or freely available AI product if the product is unmodified for government use and used for routine productivity tasks (e.g., word processors, map navigation systems).

Whether an AI use case is safety- and/or rights-impacting is determined under Office of Management and Budget (OMB) Memorandum M-24-10 Advancing Governance, Innovation, and Risk Management for Agency Use of Artificial Intelligence (March 28, 2024), which implements portions of Executive Order 14110 Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence (October 30, 2023). M-24-10 lists categories of AI use presumed to be safety- and/or rights-impacting (in Appendix I of M-24-10) and provides definitions for “safety-impacting AI” and “rights-impacting AI” (in Section 6 of M-24-10). It is possible for a use case to be presumptively safety- and/or rights-impacting without satisfying either of the definitions. The definitions require determining whether the particular AI output serves as a principal basis for a decision or action.

The full definitions from M-24-10 read as follows:

“The term ‘safety-impacting AI’ refers to AI whose output produces an action or serves as a principal basis for a decision that has the potential to significantly impact the safety of: (1) Human life or well-being, including loss of life, serious injury, bodily harm, biological or chemical harms, occupational hazards, harassment or abuse, or mental health, including both individual and community aspects of these harms; (2) Climate or environment, including irreversible or significant environmental damage; (3) Critical infrastructure, including the critical infrastructure sectors defined in Presidential Policy Directive 21 or any successor directive and the infrastructure for voting and protecting the integrity of elections; or, (4) Strategic assets or resources, including high-value property and information marked as sensitive or classified by the Federal Government.” (M-24-10, Section 6; see also Appendix I(1) which lists categories of AI applications that are presumed to be safety-impacting.)

“The term ‘rights-impacting AI’ refers to AI whose output serves as a principal basis for a decision or action concerning a specific individual or entity that has a legal, material, binding, or similarly significant effect on that individual’s or entity’s: (1) Civil rights, civil liberties, or privacy, including but not limited to freedom of speech, voting, human autonomy, and protections from discrimination, excessive punishment, and unlawful surveillance; (2) Equal opportunities, including equitable access to education, housing, insurance, credit, employment, and other programs where civil rights and equal opportunity protections apply; or (3) Access to or the ability to apply for critical government resources or services, including healthcare, financial services, public housing, social services, transportation, and essential goods and services.” (M-24-10, Section 6; see also Appendix I(2) which lists categories of AI applications that are presumed to be rights-impacting.)

According to M-24-10, agencies must implement the minimum practices for each safety- and/or rights-impacting AI use case before it is deployed.

Office of Management and Budget (OMB) Memorandum M-24-10 Advancing Governance, Innovation, and Risk Management for Agency Use of Artificial Intelligence (March 28, 2024) implements portions of Executive Order 14110 Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence (October 30, 2023).

The main requirements in M-24-10 are (1) designating a Chief AI Officer for DHS (DHS CAIO); (2) convening an AI Governance Board composed of senior DHS officials to coordinate and govern issues related to the use of AI within DHS; (3) creating an updated inventory of DHS’s AI use cases with more details on each use case, including identifying use cases determined to be safety- and/or rights-impacting; and (4) implementing minimum risk management practices outlined in M-24-10 for safety- and/or rights-impacting AI.

According to M-24-10, agencies must implement the minimum practices for each safety- and/or rights-impacting AI use case before it is deployed. For AI use cases that were already deployed before the M-24-10 requirements took effect, agencies had to implement the minimum practices by December 1, 2024, or receive compliance extensions for individual use cases from OMB. Otherwise, the agency had to stop using the AI use case.
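The rule described above can be read as a small decision procedure. The following Python sketch is only an illustration of that reading, not an official DHS or OMB tool: the `UseCase` fields, the `Status` labels, and the example IDs are hypothetical names introduced here, and the function assumes the status is being evaluated after the December 1, 2024 deadline.

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    """Hypothetical labels for the outcomes described in the text."""
    PRE_DEPLOYMENT = "minimum practices required before deployment"
    COMPLIANT = "compliant"
    EXTENSION = "operating under an OMB compliance extension"
    MUST_STOP = "use must stop"


@dataclass(frozen=True)
class UseCase:
    """Hypothetical record for one safety- and/or rights-impacting use case."""
    use_case_id: str
    deployed: bool
    practices_implemented: bool
    omb_extension: bool = False


def status_after_deadline(uc: UseCase) -> Status:
    """Classify a use case, assuming the December 1, 2024 deadline has passed."""
    if not uc.deployed:
        # Not yet deployed: the practices must be in place before deployment.
        return Status.PRE_DEPLOYMENT
    if uc.practices_implemented:
        return Status.COMPLIANT
    if uc.omb_extension:
        # Deployed without full compliance, but covered by an OMB extension.
        return Status.EXTENSION
    # Deployed, not compliant, no extension: the agency had to stop using it.
    return Status.MUST_STOP


# Illustrative inputs only; these IDs are not real inventory entries.
examples = [
    UseCase("EXAMPLE-1", deployed=True, practices_implemented=True),
    UseCase("EXAMPLE-2", deployed=True, practices_implemented=False, omb_extension=True),
    UseCase("EXAMPLE-3", deployed=True, practices_implemented=False),
    UseCase("EXAMPLE-4", deployed=False, practices_implemented=False),
]
for uc in examples:
    print(uc.use_case_id, "->", status_after_deadline(uc).value)
```

The checks are ordered so that an extension only matters for a deployed use case that has not yet implemented the minimum practices, which matches the order in which the text introduces the conditions.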

Yes, DHS is in full compliance with required minimum risk management practices for safety- and/or rights-impacting AI use cases. DHS identified 39 safety- and/or rights-impacting use cases in its review of DHS AI use cases. Of those, 28 were deployed and 12 were in pre-deployment as of December 1, 2024. Of the deployed use cases, 23 cases comply with the minimum practices, and OMB approved compliance extensions for the 5 remaining use cases (see FAQ below, “What does it mean if a safety- and/or rights-impacting use case has an extension?”).

Safety- and/or rights-impacting AI use cases that are not yet deployed are not required to fully implement risk mitigation practices. This category includes AI use cases that are in initiation or development and/or acquisition. Once DHS begins to implement an AI use case, the use case must fully comply with all minimum practices in M-24-10. DHS will continue implementing the minimum practices for its safety- and/or rights-impacting AI use cases before deployment.

DHS uses Face Recognition and Face Capture (FR/FC) technologies across a range of settings. Many of these involve travel, both within the United States and for people entering and leaving the country.

Face recognition and face capture technologies can help enhance security and speed up screening processes, while protecting privacy, civil rights, and civil liberties. In some limited cases, FR/FC technologies are also used in support of authorized law enforcement missions.

The DHS 2024 Report on Use of Face Recognition and Face Capture Technologies provides additional information about use of FR/FC at DHS, including highlighting eight FR/FC uses at DHS. We chose these eight use cases based on frequency of use and public interest.

All Face Recognition and Face Capture (FR/FC) technology is tested both prior to operational use and at least every three years during operational use. DHS Science and Technology (S&T) oversees testing and evaluation based on International Organization for Standardization/International Electrotechnical Commission (ISO/IEC) standards and technical guidance issued by the National Institute of Standards and Technology (NIST). DHS S&T applies laboratory, scenario, and operational testing to cost-effectively characterize technology performance and, when feasible, disaggregate performance by user demographics such as gender, age, and skin tone.

The DHS 2024 Report on Use of Face Recognition and Face Capture Technologies provides additional information about use of FR/FC at DHS, including S&T’s testing and evaluation of FR/FC technologies.

The Office of Management and Budget (OMB) approved compliance extensions on a case-by-case basis for safety- and/or rights-impacting AI use cases under Memorandum M-24-10 Advancing Governance, Innovation, and Risk Management for Agency Use of Artificial Intelligence (March 28, 2024). Agencies could request an extension of up to one year for a particular AI use case that could not feasibly meet the minimum practices in M-24-10 by December 1, 2024. The extension request had to include (a) a detailed justification explaining why the agency could not achieve compliance for the use of AI in question by December 1, 2024, (b) the practices the agency had in place to mitigate the risks from noncompliance, and (c) a plan for how the agency would come to implement the full set of required minimum practices in M-24-10.

DHS received OMB approval for compliance extensions for 5 use cases that DHS determined are safety- and/or rights-impacting. These use cases are (1) ICE’s Video Analysis Tool (DHS-172), (2) CBP’s Babel (DHS-185), (3) CBP’s Fivecast ONYX (DHS-186), (4) CBP’s Passive Body Scanner (DHS-2380), and (5) CBP Translate (DHS-2388). For more information about these extensions, read the accompanying blog post from CAIO Hysen.

The Office of Management and Budget Memorandum M-24-10 Advancing Governance, Innovation, and Risk Management for Agency Use of Artificial Intelligence (March 28, 2024) outlined certain categories of AI use cases that are presumed to be safety- and/or rights-impacting, unless an agency’s Chief AI Officer determines otherwise (see M-24-10, Appendix I). As DHS reviewed its AI use cases, the Chief AI Officer determined that 24 AI use cases in these presumed safety- and/or rights-impacting categories did not satisfy the M-24-10 definitions for either safety- or rights-impacting AI.

For example, ELIS Photo Card Validation via myUSCIS (DHS-189) supports beneficiaries submitting an e-filed I-765 via myUSCIS to apply for employment authorization. These applications include a digital passport-style ID photo of the applicant, which will be printed on their Employment Authorization Document (EAD) card if the application is accepted. The use case determines whether a user-uploaded ID photo is suitable for use on an EAD card, and it notifies the submitter if it detects a potential quality issue with the photo. The use case is related to determining access to Federal immigration-related services through biometrics, a presumed rights-impacting category listed in M-24-10. However, this use case only provides a real-time alert to the submitter that their photo is of insufficient quality for card production. The submitter may choose whether to resubmit a photo of sufficient quality, and USCIS’s ultimate adjudication of EADs is not dependent on this use case. As a result, the DHS CAIO determined that this use case does not satisfy the definition of rights-impacting AI in M-24-10.

The Simplified AI Use Case Inventory includes information about each use case that received this determination from the Chief AI Officer.

The Full DHS AI Use Case Inventory is available as a downloadable spreadsheet and the Simplified DHS AI Use Case Inventory is available in webpage format. The Simplified Inventory provides a streamlined overview of each AI use case inventoried in the full inventory, as well as additional details about face recognition/face capture AI use cases, and some more information about M-24-10 compliance that is not easily captured in the spreadsheet format of the Full DHS AI Use Case Inventory. For more information, see the description of the Simplified AI Use Case Inventory.

DHS assigns each new AI use case an ID number that can identify a use case over multiple years, even if its name changes. Some ID numbers are associated with use cases that were never ultimately developed or use cases that were consolidated with another use case before being reported. ID numbers are not reused once assigned.
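The ID behavior described above (stable across renames, never reused, with gaps where a use case was never developed or was consolidated) can be sketched as a small registry. This is a hedged illustration only: the `UseCaseRegistry` class, its method names, the `EX-` prefix, and the sequential numbering are assumptions made for the example and do not describe how DHS actually assigns or stores its IDs.

```python
from dataclasses import dataclass
from itertools import count


@dataclass
class Record:
    """Hypothetical inventory record keyed by a stable ID."""
    name: str
    active: bool = True


class UseCaseRegistry:
    """Illustrative registry: IDs are stable and never handed out twice."""

    def __init__(self, prefix: str = "EX-") -> None:
        self._prefix = prefix
        self._counter = count(1)  # only moves forward, so no ID is reused
        self._records: dict[str, Record] = {}

    def register(self, name: str) -> str:
        """Assign the next unused ID to a new use case."""
        use_case_id = f"{self._prefix}{next(self._counter)}"
        self._records[use_case_id] = Record(name)
        return use_case_id

    def rename(self, use_case_id: str, new_name: str) -> None:
        """Change the name while keeping the same ID."""
        self._records[use_case_id].name = new_name

    def withdraw(self, use_case_id: str) -> None:
        """Mark a use case as not developed or consolidated; its ID stays taken."""
        self._records[use_case_id].active = False

    def name_of(self, use_case_id: str) -> str:
        return self._records[use_case_id].name


registry = UseCaseRegistry()
first = registry.register("Photo check")
registry.rename(first, "Photo quality check")   # same ID, new name
registry.withdraw(first)                        # ID is kept, not released
second = registry.register("Translation helper")
print(first, second)
```

Because withdrawn records are kept rather than deleted, an ID seen in an earlier inventory always refers to the same use case, which is the property the paragraph above describes.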

DHS Headquarters is a term used by DHS personnel to describe the collection of DHS organizations that are not considered a Component. For example, this term is used informally to describe offices within the Management Directorate (MGMT) and/or other offices like the DHS Privacy Office and the DHS Office of the Officer for Civil Rights and Civil Liberties. DHS Headquarters is no longer listed as a DHS entity in the DHS AI Use Case Inventory because it is an informal term. Previous versions used the term to list a few use cases in MGMT that should have been listed under MGMT in the inventory. This is corrected in the 2024 annual update of the DHS AI Use Case Inventory. Additionally, other offices that are covered by the term DHS Headquarters, including the Office of Strategy, Policy, and Plans, do not have AI use cases. If they do in the future, they will be included in the DHS AI Use Case Inventory.

Some AI use cases were included in previous versions of the DHS AI Use Case Inventory but are no longer used by DHS. These use cases, also known as retired or decommissioned use cases, are included in both the full and simplified inventories, but they are labeled to show that they are no longer in use. DHS is keeping these in the inventory for transparency and in accordance with requirements for agency use case inventories in Office of Management and Budget Guidance for 2024 Agency Artificial Intelligence Reporting Per EO 14110 (August 2024).

DHS completes one main update to the DHS AI Use Case Inventory per year in line with annual guidance from OMB and makes other revisions to specific use case information as needed between annual updates. DHS provides a log of those revisions on the Simplified AI Use Case Inventory. The downloadable Full DHS AI Use Case Inventory also identifies revised data fields between annual updates.

The most recent annual update was published on December 16, 2024.

Other questions about the AI Use Case Inventory? Please contact DHS at AI@hq.dhs.gov.